Generalization (Qing Qu)

Qing Qu (qingqu@umich.edu) is an assistant professor in the EECS Department at the University of Michigan. Before that, he was a Moore-Sloan Data Science Fellow at the Center for Data Science, New York University, from 2018 to 2020. He received his Ph.D. in Electrical Engineering from Columbia University in Oct. 2018, his B.Eng. from Tsinghua University in Jul. 2011, and an M.Sc. from Johns Hopkins University in Dec. 2012, the latter two both in Electrical and Computer Engineering. His research lies at the intersection of the foundations of data science, machine learning, numerical optimization, and signal/image processing, and currently focuses on deep representation learning and diffusion models. He received the Best Student Paper Award at SPARS’15 and the Microsoft PhD Fellowship in machine learning in 2016. He also received the NSF CAREER Award in 2022, an Amazon Research Award (AWS AI) in 2023, a UM CHS Junior Faculty Award in 2025, and a Google Research Scholar Award in 2025. He was the program chair of the newly established Conference on Parsimony & Learning (CPAL’24), an area chair for NeurIPS, ICML, and ICLR, and an action editor for TMLR.

Dr. Qu’s Related Work and Experience:

Dr. Qu’s seminal work [2] on this topic won the Best Paper Award at the NeurIPS 2023 Diffusion Model Workshop. He has recently published a line of pioneering work on understanding the generalizability of generative AI models [1-5] and on controlling diffusion models [6,7,9,10]. He has extensive experience delivering tutorials, having previously given tutorials at ICASSP, CVPR, and CPAL. Together with Dr. Shen, he has also developed “AI Magic,” a K-12 summer camp on generative AI.

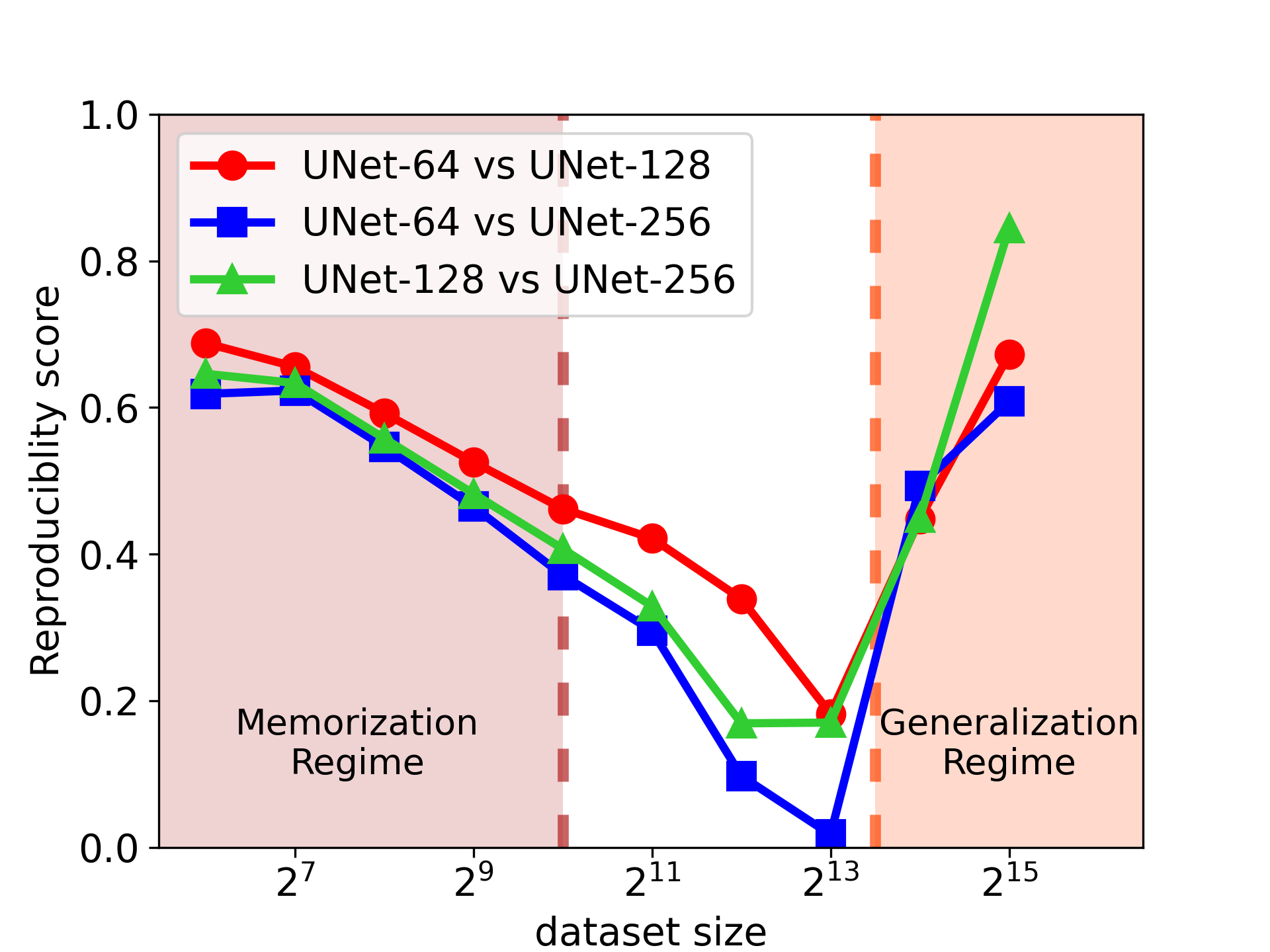

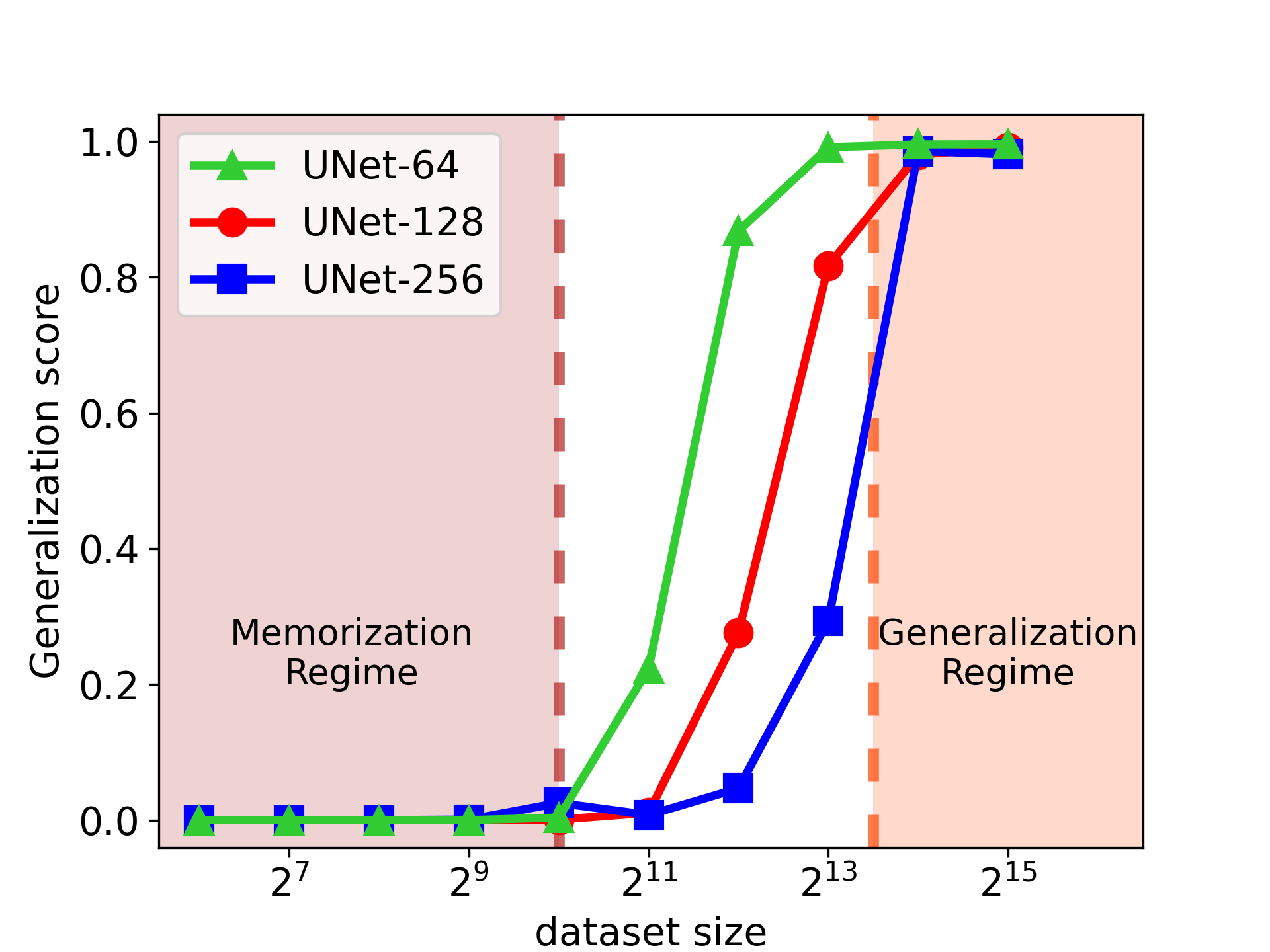

Figure 1: Co-emergence of reproducibility and generalizability in the denoising diffusion probabilistic model with UNet architectures of three different sizes (UNet-64, UNet-128, and UNet-256), trained on varying numbers of images from the CIFAR-10 dataset. Figure courtesy of [2].

Papers

Overview Paper:

- [1] Huijie Zhang, Peng Wang, Siyi Chen, Zekai Zhang, Qing Qu.

Generalization of Diffusion Models: Principles, Theory, and Implications.

SIAM News, April 2025.

The Phenomenon of Generalization:

- [2] Huijie Zhang*, Jinfan Zhou*, Yifu Lu, Minzhe Guo, Liyue Shen, Qing Qu.

The Emergence of Reproducibility and Consistency in Diffusion Models.

International Conference on Machine Learning (ICML’24), 2024.

Preprint – PDF – BibTeX – Slides

- [3] Huijie Zhang, Zijian Huang, Siyi Chen, Jinfan Zhou, Zekai Zhang, Peng Wang, Qing Qu.

Understanding Generalization in Diffusion Models via Probability Flow Distance.

arXiv preprint arXiv:2505.20123, 2025.

Preprint – Project Website

- [4] Xiang Li, Yixiang Dai, Qing Qu.

Understanding Generalizability of Diffusion Models Requires Rethinking the Hidden Gaussian Structure.

Neural Information Processing Systems (NeurIPS’24), 2024.

Preprint – PDF – BibTeX

Theory on Generalization:

- [5] Peng Wang*, Huijie Zhang*, Zekai Zhang, Siyi Chen, Yi Ma, Qing Qu.

Diffusion Models Learn Low-Dimensional Distributions via Subspace Clustering.

arXiv preprint arXiv:2409.02426, 2024.

Preprint – PDF – Project Website

Implications:

- [6] Siyi Chen*, Huijie Zhang*, Minzhe Guo, Yifu Lu, Peng Wang, Qing Qu.

Exploring Low-Dimensional Subspaces in Diffusion Models for Controllable Image Editing.

Neural Information Processing Systems (NeurIPS’24), 2024.

Preprint – PDF – BibTeX – Code – Project Website

- [7] Wenda Li*, Huijie Zhang*, Qing Qu.

Shallow Diffuse: Robust and Invisible Watermarking through Low-Dimensional Subspaces in Diffusion Models.

arXiv preprint arXiv:2410.21088, 2024.

Preprint – PDF – BibTeX – Code – Project Website

- [8] Xiao Li*, Zekai Zhang*, Xiang Li, Siyi Chen, Zhihui Zhu, Peng Wang, Qing Qu.

Understanding Representation Dynamics of Diffusion Models via Low-Dimensional Modeling.

arXiv preprint arXiv:2502.05743, 2025.

Other Related Results:

- [9] Xiang Li, Rongrong Wang, Qing Qu.

Towards Understanding the Mechanisms of Classifier-Free Guidance.

arXiv preprint arXiv:2505.19210, 2025.

Preprint – PDF – BibTeX

- [10] Siyi Chen, Yimeng Zhang, Sijia Liu, Qing Qu.

The Dual Power of Interpretable Token Embeddings: Jailbreaking Attacks and Defenses for Diffusion Model Unlearning.

arXiv preprint arXiv:2504.21307, 2025.

Preprint – PDF – BibTeX

- [11] Huijie Zhang*, Yifu Lu*, Ismail Alkhouri, Saiprasad Ravishankar, Dogyoon Song, Qing Qu.

Improving Efficiency of Diffusion Models via Multi-Stage Framework and Tailored Multi-Decoder Architectures.

Conference on Computer Vision and Pattern Recognition (CVPR’24), 2024.

Preprint – PDF – BibTeX – Code – Project Website